How to Choose a Linux Distribution [Basic Guide]

Linux is an operating system created in 1991 by Linus Torvalds, a Finnish computer science student who wanted to connect to his university’s Unix mainframe using his 386 PC.

At the time, one of the few Unix-like systems available for PCs was the educational operating system called MINIX.

MINIX was a minimal Unix-like operating system created by renowned computer science professor Andrew S. Tanenbaum, with the purpose of teaching how operating systems work. The licensing of MINIX at the time limited its use to educational purposes, apart from other limitations.

Initially, Torvalds relied on MINIX to write his own kernel and basic applications, borrowing many ideas from it. Later, everything that depended on MINIX was replaced, largely with components from the GNU Project.

Torvalds wanted his creation to be called “Freax”, a blend of “Free”, “Freak” and the “X” of Unix.

From the beginning, Linux was a sensation among programmers and technology lovers. It was distributed through FTP servers and discussion forums and improved by many enthusiasts.

Ari Lemmke, a colleague of Torvalds who administered the FTP server that hosted the files, disliked the name “Freax” and named the project “Linux”, with Linus’s consent.

Today, the operating system created by Torvalds is widely used throughout the Internet infrastructure, on all of the world’s 500 fastest supercomputers, and on most cell phones through its embedded version called Android.

This is largely due to the license adopted for the software, the GNU GPL, which guarantees access to the source code, allows anyone to improve it, and requires that improved versions be distributed under the same terms.

Linux distributions

Because it is an open source operating system, Linux can be changed and customized by any person or company.

As a result, several hobbyist groups and commercial companies emerged in the 1990s that customized Linux and created their own distributions.

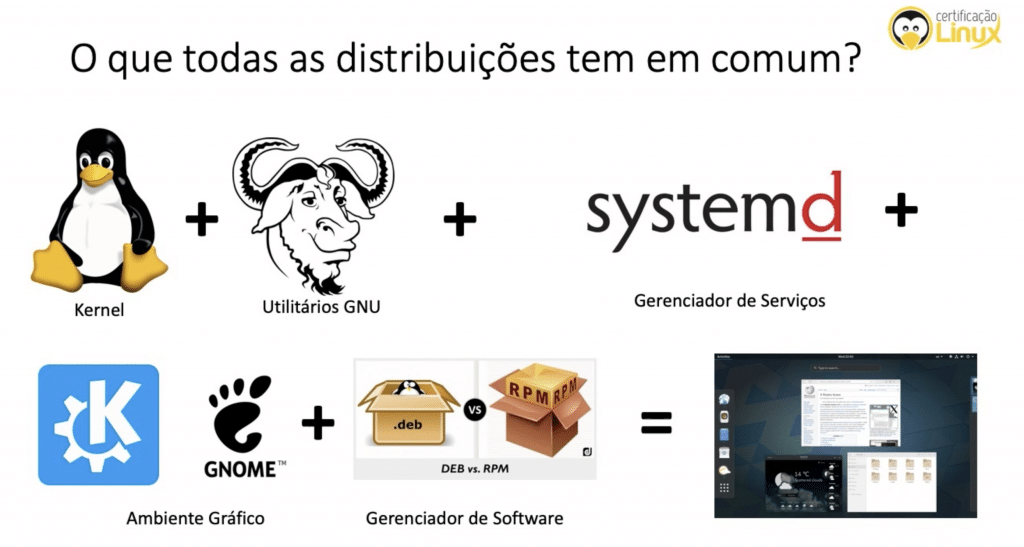

A Linux distribution consists of:

- Operating system core, called the kernel;

- GNU Project utilities;

- Init system and service manager: SysV init or systemd;

- Graphical environment: KDE, GNOME, Xfce, etc.;

- Package manager.

Each Linux distribution can modify any of these components, customizing them to the taste of a user group, for a specific purpose, or for a well-defined application.

That’s why there are end-user distributions for desktop use, such as Fedora, Ubuntu, and openSUSE, which offer user-friendly tools and a robust graphical environment, and server-oriented distributions designed for efficient use of computational resources, such as Red Hat Enterprise Linux, Fedora Server, SUSE Linux Enterprise, and CentOS.

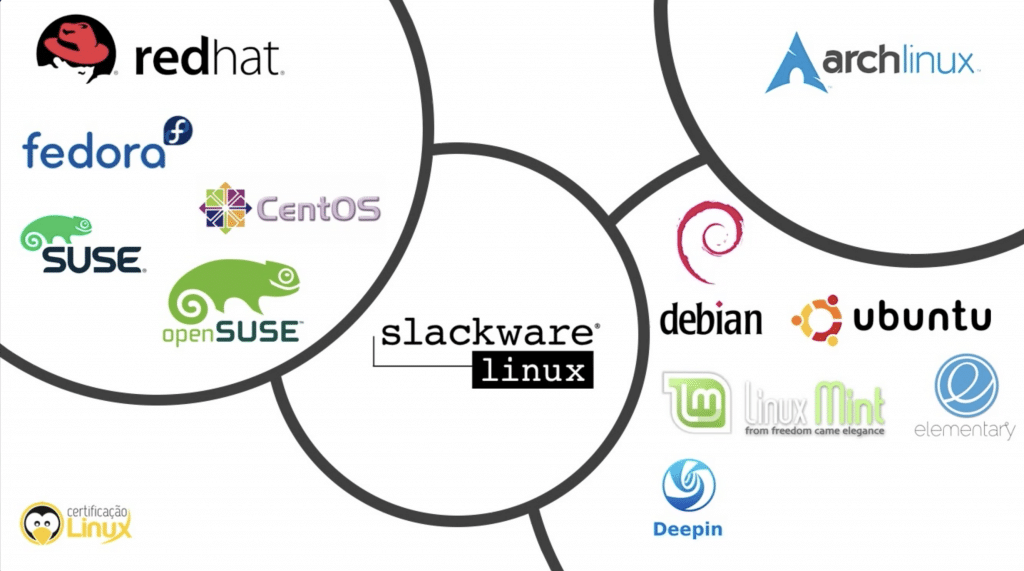

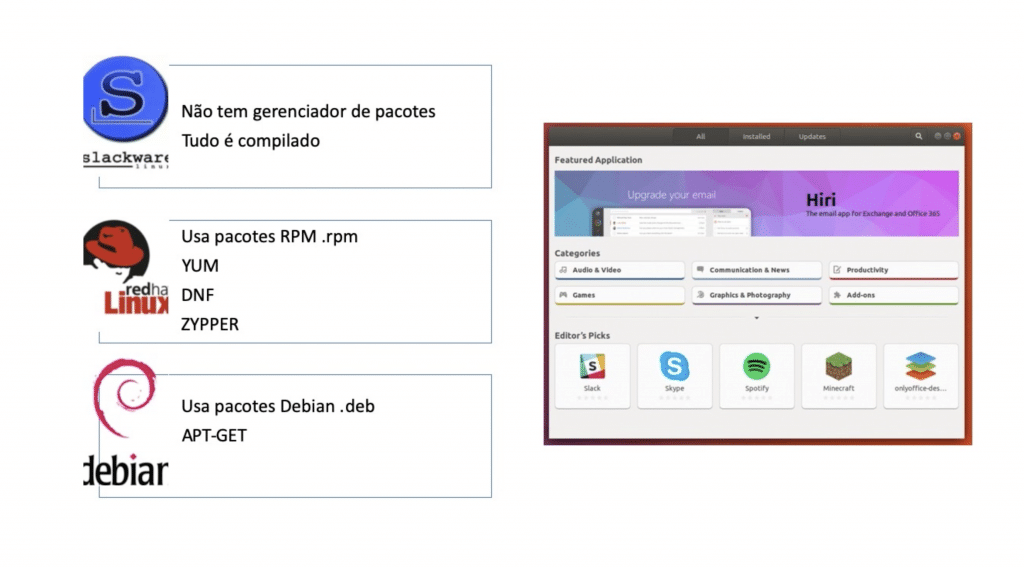

It is also possible to classify distributions by the family to which they belong. Basically there are two major families: Debian and Red Hat. Because they are among the oldest stable distributions, almost all other distributions existing in 2020 are based on one of them.

Launched in 1993 by Ian Murdock, Debian is a Linux distribution maintained by thousands of volunteers who share Richard Stallman’s ideals regarding the freedom of users to use, distribute, and improve the software.

In 2004, Mark Shuttleworth and his team set out to make Linux as friendly as possible for desktop users. They built on Debian, shortened the learning curve for new users as much as possible, and from that work created the Ubuntu distribution.

In 1994, Red Hat Linux was released by what became one of the first commercial companies built entirely around Linux. Specifically aimed at business applications, its Linux is intended for use on servers, and its annual revenue of over one billion dollars is maintained through support contracts. In 2019, Red Hat was bought by IBM for 34 billion dollars. Not bad for a Free Software company.

Other distributions were born from Red Hat, such as Fedora, aimed at Desktops, and CentOS for servers. Fedora is maintained by Red Hat and adopts new technologies very quickly.

Another early Linux company, SUSE, founded in 1992 in Germany, is known for the seriousness of its distribution and for its commercial SUSE Linux Enterprise for servers. In 2005, the company created openSUSE, a version that is both free of charge and free software, open to external developers. SUSE Linux became known for its management tool called YaST, which made installing software much easier long before Debian and Red Hat created similar tools (apt and yum).
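As a rough illustration of the family classification described above, here is a minimal Python sketch that guesses a distribution's family from the `ID` and `ID_LIKE` fields of the standard `/etc/os-release` file. The `FAMILIES` table and the function names are assumptions made for this example, not an official classification, and real systems may report identifiers that the table does not cover.

```python
import shlex

# Hypothetical mapping from os-release identifiers to the two families
# discussed in the text; real systems may report other values.
FAMILIES = {
    "debian": "Debian",
    "ubuntu": "Debian",
    "rhel": "Red Hat",
    "fedora": "Red Hat",
    "centos": "Red Hat",
}


def parse_os_release(text: str) -> dict:
    """Parse KEY=value lines in the os-release format into a dict."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, raw = line.partition("=")
        # shlex.split strips the optional shell-style quotes around the value.
        parts = shlex.split(raw)
        fields[key] = parts[0] if parts else ""
    return fields


def guess_family(fields: dict) -> str:
    """Return "Debian", "Red Hat", or "other" based on ID and ID_LIKE."""
    candidates = [fields.get("ID", "")] + fields.get("ID_LIKE", "").split()
    for name in candidates:
        if name in FAMILIES:
            return FAMILIES[name]
    return "other"


def detect_family(path: str = "/etc/os-release") -> str:
    """Read the os-release file at `path` and guess the family."""
    with open(path, encoding="utf-8") as handle:
        return guess_family(parse_os_release(handle.read()))
```

On a Linux machine, calling `detect_family()` reads the local file. Checking `ID_LIKE` after `ID` lets derivatives that are not in the table still resolve to their parent family.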

Embedded Systems

When it comes to embedded systems, Linux is also a champion, precisely because its source code is open, which makes adaptations easier, and because it runs on a wide range of processor architectures (x86, ARM, IA-64, m68k, MIPS, OpenRISC, PA-RISC, PowerPC, s390, SPARC, etc.).

Embedded systems are devices created for a specific function that, behind the scenes, are computers connected to specific interfaces. Examples include automotive multimedia systems, smart TVs, autonomous cars, Internet routers, medical instruments, industrial automation, and navigation systems.

Android

Without a doubt, with 2.5 billion active devices in 2019, Android is a very successful form of embedded Linux. Founded in 2003, the company Android Inc. initially began developing a system to be used in digital cameras. In 2005, it was acquired by the giant Google, and Android went on to become the most used operating system on mobile devices.

Android uses a modified version of the Linux kernel, specially adapted to work with small touchscreens. Android devices also typically include a series of proprietary Google apps, such as Calendar, Maps, Mail, and the browser.

Raspberry Pi

The Raspberry Pi is a low-cost computer ($25), the size of a credit card, with Wi-Fi, USB ports, HDMI, and audio. In addition, it has several input and output pins, known as GPIO, that can be connected to other electronic devices, extension boards, sensors, servo motors, and other hardware. A customized version of Debian called Raspbian (later renamed Raspberry Pi OS) was created to run on the Raspberry Pi.

The Raspberry Pi is one of the drivers of the growth of embedded devices, due to its versatility and price.

Linux in the Cloud

The term cloud computing was coined to designate the consumption of computing resources in distributed data centers, in public or private clouds. In 2017, it was estimated that 90% of cloud applications ran on Linux.

The major cloud computing players are: Amazon Web Services (AWS), Google Cloud Platform, Rackspace, DigitalOcean, and Microsoft Azure. All of them offer several Linux distributions in their infrastructures.

How to Choose the Operating System?

The choice of a computer’s operating system is generally made when purchasing the hardware, since a desktop computer is rarely sold without an operating system today.

This is how Microsoft has firmly held the market for operating systems on IBM PC-compatible desktop computers for over 25 years.

Apple also ships its own proprietary UNIX operating system, called macOS, on its computers, whether iMacs or MacBooks. On its cell phones, the Cupertino giant also runs a variant of UNIX, called iOS.

It is in the server market that Linux flourished: it runs a large part of the Internet ecosystem and 100% of the world’s top supercomputers.

Although manufacturers almost always ship the hardware with a pre-installed operating system, the user can replace it with one they prefer and that meets their requirements.

Five years ago, installing Linux on a notebook was experimental, and the distribution chosen was not always able to identify and make correct use of the hardware. But that has changed dramatically. Today it is perfectly possible to install any major Linux distribution on almost any notebook without malfunctions.

Linux has even been a wise choice to revive old notebooks, with lightweight distributions capable of running on old hardware.

How to Choose a Linux Operating System?

Linux can basically be divided into three categories: enterprise, consumer, and experimental.

Enterprise distributions may be free or commercial, generally involve a support contract, and provide a robust and thoroughly tested operating system for business applications and services.

The distributions in this category are:

- Red Hat

- CentOS

- SUSE Enterprise

- Debian

- Ubuntu LTS

- Fedora Server

There are end-consumer distributions aimed at Desktop computers, such as:

- Non-LTS Ubuntu releases and flavors (Lubuntu, Xubuntu, Kubuntu, etc.)

- openSUSE

- Fedora Workstation

- elementary OS

- Zorin OS

- Linux Mint

- Deepin

There are still experimental distributions, for hackers and advanced users:

- Arch

- Gentoo

- BackTrack (the predecessor of Kali)

- Kali

- PureOS

### Life Cycle

Depending on the application that Linux will run, it is important to consider the operating system’s support lifecycle.

Once the lifecycle of a software version is over, the official developers stop providing support, updates, and bug fixes for that particular version. The user’s options in this case are: make the corrections themselves, keep running an unsupported system, or upgrade to a newer version.

The lifecycle of Linux distributions may vary by category, and also by distributor. Enterprise versions usually maintain active support for a version for 10 years. This is necessary because in business applications, changes are made cautiously and slowly. It is common today for hardware to lose its usefulness before the end of the software life cycle.

Consumer-oriented distributions maintain support through forums, usually on the distributor’s website, for a period of about three releases, which generally lasts around 5 years.
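To make the lifecycle reasoning concrete, the following Python sketch checks whether a given release is still inside its support window. The `SUPPORT_YEARS` table only encodes the rough figures mentioned above (about 10 years for enterprise, about 5 for consumer distributions); the function names and any release dates passed in are hypothetical, and each distributor publishes its own real dates.

```python
from datetime import date

# Approximate support windows in years, taken from the rough figures in
# the text. Real distributions publish their own end-of-support dates.
SUPPORT_YEARS = {"enterprise": 10, "consumer": 5}


def end_of_support(release: date, category: str) -> date:
    """Return the approximate date on which support ends."""
    years = SUPPORT_YEARS[category]
    try:
        return release.replace(year=release.year + years)
    except ValueError:
        # The release fell on 29 February and the target year is not a leap year.
        return release.replace(year=release.year + years, day=28)


def is_supported(release: date, category: str, today: date) -> bool:
    """True while `today` is before the approximate end of support."""
    return today < end_of_support(release, category)
```

For example, `is_supported(date(2020, 4, 1), "consumer", date.today())` answers whether a hypothetical consumer release from April 2020 would still be covered under these assumptions.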

Experimental distributions are generally supported through community wikis, which are actively maintained and constantly changing.

In addition, some distributions make beta versions of their systems available to the public. These have not been extensively tested, may contain several bugs, and do not deliver maximum performance because they include debugging routines. After numerous tests and corrections, the distributor releases a “stable” version.

Graphical Desktops

At its inception, Linux was a simple shell in the Unix tradition, and it stayed that way for a long time. It was something made by programmers for programmers, so being friendly and good-looking wasn’t an important requirement.

But in recent years, this narrative has given way to a beautiful operating system, with a variety of first-class graphical environments and applications, culminating in a complete desktop experience, with everything an inexperienced user needs at hand to work, communicate, and have fun.

Without a doubt, one of the beauties of Linux is its plurality of graphical environments for all tastes, spread across numerous distributions, while it still remains a fully standard operating system.

Over time, several graphical environments were built to run on top of the Linux display server.

Among them, we can mention the KDE, GNOME, XFCE and Cinnamon environments.

KDE

KDE is a large community of developers that, since 1996, has been developing a cross-platform graphical environment now known as KDE Plasma. Its name derives from K Desktop Environment, and the letter “K” was chosen simply because it comes right before “L” for Linux. KDE and its applications are written with the Qt framework.

The purpose of the KDE community is both to offer an environment with the basic applications and functionality for everyday needs and to give developers all the tools and documentation necessary to simplify the development of applications for the platform.

KDE is based on the principle of ease of use and customization. All the elements of the graphical environment can be customized: panels, window buttons, menus, and various elements such as clocks, calculators, and applets. The extreme flexibility to customize the appearance even allows themes to be shared by users.

The KWin window manager is responsible for providing an organized and consistent graphical interface and an intuitive taskbar.

| KDE Plasma with OSX Theme |

| KDE Plasma another OSX-style variation |

| Windows-style KDE Plasma |

GNOME

GNOME (originally an acronym for GNU Network Object Model Environment) is an open source project for a graphical environment that is also cross-platform, with a special emphasis on usability, accessibility, and internationalization.

The GNOME Project is maintained by several organizations and developers and is part of the GNU Project. Its main contributor and maintainer is Red Hat.

GNOME 3 is the default graphical environment for major distributions such as Fedora, SUSE Linux, Debian, Ubuntu, Red Hat Enterprise Linux, CentOS, and many others.

There is also a fork of GNOME 2 known as MATE, since the change from GNOME 2 to GNOME 3 was large, and some users simply preferred to stick with an environment closer to GNOME 2.

GNOME was created in 1997 by two Mexican developers, Miguel de Icaza and Federico Mena, who were unhappy that the Qt framework, used to develop applications for KDE, was not free software at the time.

Thus, they preferred to use GTK (originally the GIMP Toolkit) as the standard framework for the development of GNOME, since it was already under a free license (the LGPL).

GNOME 1 and 2 followed the traditional “taskbar” desktop.

GNOME 3 changed this with the GNOME Shell, a more abstract environment in which switching between tasks and virtual desktops takes place in a separate area called the “Overview”.

| GNOME 3 on CentOS |

| GNOME 3 on Ubuntu |

| Figure 6 - MATE on Linux Mint |

Xfce

Xfce is a desktop environment for Unix-like operating systems, such as Linux and BSD, founded in 1996.

Xfce aims to be light, fast, and visually appealing. It incorporates the Unix philosophy of modularity and reuse and consists of separate packaged parts that together provide all the functions of the work environment but can be selected in subsets to suit the user’s needs and preferences.

Like GNOME, Xfce is based on the GTK framework, but it’s an entirely different project.

It is widely used in distributions that are intended to be lightweight, specially designed to run on old hardware.

Xfce can be installed on several UNIX platforms, such as NetBSD, FreeBSD, OpenBSD, Solaris, Cygwin, MacOS X, etc.

Among the distributions that use Xfce, we can mention:

- Linux Mint Xfce edition

- Xubuntu

- Manjaro

- Arch Linux

- Linux Lite

| Xfce on Linux Mint |

| Xfce on Linux Manjaro |

Cinnamon

Cinnamon is a graphical desktop project created by the folks at Linux Mint. It is derived from GNOME 3 but keeps the traditional desktop layout of GNOME 2. Its user experience is very similar to Windows XP, 2000, Vista and 7, easing the learning curve for users who have migrated from these operating systems.

| Figure 9 - Cinnamon on Linux Mint |

It is important to say that even with a beautiful, practical and diverse graphical environment, the strength of Linux lies in the command line. For advanced users, it is in the operating system shell that the magic happens, because Linux allows the user to do whatever they want, often just by editing a file. Chaining commands together also allows you to create true computational Swiss Army knives.

Access to the terminal is simple and fast. Just search for the Terminal application in the graphical environment, or press Ctrl+Alt+F1, F2, F3, and so on to switch to a text console.
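The idea of chaining commands mentioned above can be sketched in Python with the standard `subprocess` module. The example below reproduces the shell pipeline `sort | uniq -c`, which counts repeated lines. It assumes the usual `sort` and `uniq` utilities are installed, and the function name `count_lines` is made up for this illustration.

```python
import subprocess


def count_lines(text: str) -> dict:
    """Feed `text` through the equivalent of `sort | uniq -c`."""
    sort = subprocess.Popen(
        ["sort"], stdin=subprocess.PIPE, stdout=subprocess.PIPE, text=True
    )
    # The output of `sort` becomes the input of `uniq -c`, as with a shell pipe.
    uniq = subprocess.Popen(
        ["uniq", "-c"], stdin=sort.stdout, stdout=subprocess.PIPE, text=True
    )
    # Close our copy so `sort` is notified if `uniq` exits early.
    sort.stdout.close()

    sort.stdin.write(text)
    sort.stdin.close()
    output, _ = uniq.communicate()
    sort.wait()

    counts = {}
    for line in output.splitlines():
        # Each output line looks like "<count> <value>" with leading padding.
        number, value = line.split(maxsplit=1)
        counts[value] = int(number)
    return counts
```

Each program does one small job, and the pipe combines them. In a terminal the same result comes from typing `sort file.txt | uniq -c`, where `file.txt` is a placeholder for any text file.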

Linux in Virtual Machines

It’s impossible to talk about a computing environment without talking about virtualization and containers. The old in-house data centers in companies gave way to cloud computing, with flexible environments.

The cloud concept appeared as early as 1977 in documents from ARPANET, the forerunner of the Internet as we know it. It was used to represent a large network of interconnected, geographically dispersed servers, in which each environment is self-sufficient and independent.

Popularized by large companies such as Amazon Web Services (AWS), Microsoft Azure and Google Cloud Platform, the term cloud computing is currently used to designate distributed computing, where computational resources are shared and distributed to complete a particular task or application.

Linux is perfectly adaptable to this type of computing environment, which seems to have been made for it, in a perfect symbiosis.

Despite having access to increasingly efficient and powerful hardware, operations performed on traditional physical servers - or bare-metal - inevitably face significant practical limits.

The cost and complexity of creating and launching a single physical server means that adding or removing resources quickly enough to meet demand is difficult or, in some cases, impossible. On top of the cost of the server itself come the costs of energy and cooling, of an Internet link, and of the security provided by a good firewall.

Safely testing new configurations or complete apps before release can also be cumbersome, expensive, and time-consuming.

In this sense, computing evolved to provide virtual servers, which can be created or destroyed in a few seconds, or even have the capacity to rapidly increase and decrease their computing power.

This provides a unique differentiator for corporate applications, which must meet ever-changing business needs.

Machine virtualization is based on the following principles:

- Software accesses hardware resources and drivers in a way that is indistinguishable from a non-virtualized experience.

- The customer has complete control over the virtualized system hardware.

- Physical computing, memory, network, and storage resources can be divided among various virtual entities.

- Each virtual device is represented in its software and user environments as a real, independent entity.

- Correctly configured, nearly isolated resources can provide more secure applications without visible connectivity between environments.

- New virtual machines can be provisioned and executed almost instantly and then destroyed as soon as they are no longer needed.

The kind of adaptability that virtualization offers even allows scripts to add or remove virtual machines in seconds, instead of the weeks it takes to purchase, provision, and deploy a physical server.

Container Virtualization

A VM is a complete operating system whose relationship with hardware resources (CPU, memory, storage, and network) is completely virtualized: it thinks it is running on its own computer.

A hypervisor installs a VM from the same ISO image that you would download and use to install an operating system directly onto an empty physical hard drive.

A container, on the other hand, is effectively an application, launched from a script-like template, that thinks it is an operating system.

In container technologies (such as LXC and Docker), containers are nothing more than abstractions of software and resources (files, processes, users) that depend on the host kernel and on a representation of the “four main” hardware resources (CPU, RAM, network, and storage).

A container, then, is a set of one or more processes organized in isolation from the rest of the system, with its own abstraction of disk, network, and shared libraries. It has all the files necessary to execute the processes for which it was created. However, containers use the kernel of the host on which they run.

In practice, containers are portable and consistent throughout the migration between development, test, and production environments. These characteristics make them a much faster option than traditional development models, which rely on the replication of traditional test environments.

Because containers are effectively isolated extensions of the host kernel, they allow for incredibly lightweight and versatile computing opportunities.
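One simple way to observe the shared kernel described above is to compare the kernel release reported on the host with the one reported inside a container. The Python sketch below uses the standard `platform.release()` call, which returns the same information as `uname -r`. The helper names are hypothetical, and the comparison assumes you collect the two strings yourself, once on the host and once inside the container.

```python
import platform


def kernel_release() -> str:
    """Return the release string of the running kernel (like `uname -r`)."""
    return platform.release()


def shares_kernel(host_release: str, container_release: str) -> bool:
    """True when both environments report the same kernel release."""
    return host_release.strip() == container_release.strip()
```

Running `kernel_release()` on a Linux host and again inside a container on that host should give the same value, whereas a virtual machine would normally report the release of its own guest kernel.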

DevOps and Containers

Containers are the perfect marriage between software development and infrastructure.

Containers allow you to replicate a production environment in development very easily, and vice versa. The entire environment created for the development of an application can be migrated in seconds to production with minimal effort. All configurations, libraries, dependencies, files, and locations go together with the application encapsulated in the container with maximum portability, configurability, and isolation.

Are containers VMs?

Not exactly. The two technologies are complementary. With virtualization, it is possible to run several operating systems (Windows or Linux) simultaneously on a single hardware system.

Containers, on the other hand, share the same kernel as the host operating system and isolate the application processes from the rest of the system. This provides isolation of the application without the need to install a full virtual machine. This way, “container instances” can be initialized, stopped, and restarted faster than a traditional VM.

Think of containers as apartments in a building. Each family lives in a different apartment, with its own wall color, furniture and appliances, but they share common areas such as the entrance hall, elevators, party room, and the sewage, water and gas networks.

Virtual machines, on the other hand, are like a gated community with several houses. Each house must have its own walls, garden, sewage system, heating, roof, etc. The houses rarely share the same architecture, number of rooms, size, or layout. In the community, the houses only share the street system, security and some infrastructure; otherwise they are very independent.

Container Software

LXC

The LXC package is used to create containers, slightly heavier than an application container, but much lighter than a virtual machine. It contains its own simple operating system, which interfaces directly with the host operating system.

Because it contains its own operating system, LXC can sometimes be confused with a virtual machine; however, it still needs the host operating system to function.

Docker

The Docker package is also used to create containers. Developed by Docker, Inc., it has an Enterprise version and a Community version. Extremely lightweight, it allows several containers to run on the same host operating system.

Docker needs to run a service (daemon) on the host operating system that manages the installed Docker images. It offers both a text-mode client to create and manage images and a graphical interface client.

Image build instructions in Docker are written in a plain-text file called a Dockerfile, while multi-container setups managed with Docker Compose are described in a file in YAML format.

There is undoubtedly a migration from VMs to containers, precisely because of the ease of software delivery and the consistency between development and production.

Conclusion

You’ll only know what your favorite version is if you try several. My favorite distro was openSUSE. Today I use Fedora Workstation on one notebook and on the other I am loving Zorin OS.